AI is the driving force behind today’s digital landscape, and as companies become increasingly reliant on AI strategies, data quality is more important than ever. Clean, accurate, and well-structured data isn’t just convenient—it’s essential for reliable results and informed decision-making.

In this article, we’ll explore how AI is transforming data quality standards across industries, examine some powerful tools that can revolutionize your data processing and insights, and share best practices to maintain high data quality in AI projects.

How AI is redefining data quality in key industries

AI now does far more than automate tasks or analyze vast datasets, and its relationship with data quality is no longer one-sided. AI still depends on good data, but it is also reshaping how we define and improve data quality, especially in sectors where information accuracy is non-negotiable.

eCommerce

eCommerce businesses now use AI to create personalized shopping experiences, manage inventory efficiently, and segment customers. Advanced algorithms analyze consumer behavior and purchasing patterns to deliver targeted product recommendations and optimize marketing campaigns.

But these benefits only materialize if the underlying data is reliable. When eCommerce companies feed their AI clean, structured, and current data, it generates valuable insights that drive tangible improvements in sales and customer loyalty. As a result, AI has changed how these companies capture and process data and raised the bar for quality standards across the industry.

Law, finance, and insurance

In legal, financial, and insurance fields, data quality in AI is tied to security and regulatory compliance. These industries use AI to sift through large volumes of documents, spot fraudulent activities, and assess risks automatically.

Legal teams, for example, use natural language processing to review contracts and flag potentially problematic clauses, while finance and insurance companies rely on precise data to set fair premiums and manage risk exposure. Across these sectors, AI does more than speed up processes. When it is fed verified, consistent information, it helps keep decisions grounded in that information.

Tech and software

Software and technology companies use AI to build innovative solutions for everything from detecting system anomalies to generating code automatically. AI's ability to analyze vast datasets helps them optimize development, anticipate bugs, and enhance user experiences.

For tech companies, quality data isn’t optional—it’s the foundation that ensures algorithms work correctly and adapt quickly to new scenarios. By integrating advanced analytics and generative AI, tech firms can create accurate prototypes, simulate complex scenarios, reduce margins of error, and accelerate innovation cycles.

Best generative AI tools to optimize data processing & insights

Advances in AI have led to a new generation of tools designed to improve how we process and interpret data. These solutions don’t just automate tedious tasks—they generate synthetic data and predictive models that uncover hidden patterns. Here are some of the most cutting-edge tools transforming data work across industries:

Data processing tools

- Team-GPT lets you customize generative AI for data analysis, automating complex machine learning and deep learning tasks. Its pattern recognition and data classification capabilities support strategic decision-making. It can also extract valuable insights and generate customer-focused recommendations that improve operational efficiency.

- Julius AI focuses on creating visual representations of data and making accurate predictions. Its statistical modeling capabilities enhance the quality of analysis, while its chat interface enables real-time data queries—perfect for industries where decisions can’t wait.

- Alteryx specializes in data preparation, cleaning, and integration through automation. Its AI capabilities streamline workflows and suggest process improvements, while its predictive tools help forecast trends. It also pinpoints process inefficiencies so companies can act proactively.

Data analysis tools

- Microsoft Power BI with Copilot transforms data analysis by generating high-quality reports and visualizations through natural language queries. It automatically detects trends and outliers, suggests next analytical steps, and provides further insights based on findings, streamlining the workflow for analysts and data managers.

- Qlik Answers integrates generative AI directly into dashboards and reports, enabling real-time interaction. It provides automated summaries of key data and allows users to generate reports using natural language, making insights accessible without requiring specialized knowledge.

- DataRobot, a pioneer in automated machine learning that now also offers generative AI capabilities, supports advanced analytics by automating the development and deployment of machine learning models. It optimizes model selection and fine-tunes hyperparameters, dramatically reducing the time needed to develop more accurate predictive systems.

| Tool | Automation | Predictive Analysis | Data Visualization | Process Optimization |
|---|---|---|---|---|
| Microsoft Power BI | ✔️ | ✔️ | ✔️ | ✔️ |
| Qlik Answers | ✔️ | ❌ | ✔️ | ✔️ |
| DataRobot | ✔️ | ✔️ | ❌ | ✔️ |

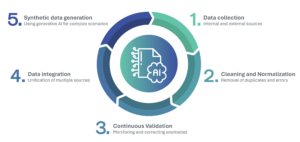

Top 5 practices for ensuring data quality in AI

Maintaining data quality in AI is an ongoing challenge that demands well-defined strategies and best practices at every stage—from collection to processing and analysis. Let’s take a look at the five key practices to keep your AI systems performing at their best.

Develop a data cleaning and normalization process

Start by establishing a systematic process to remove errors, duplicates, noise, and inconsistencies from your data. In industries like eCommerce, customer information comes from multiple channels. Normalizing fields such as names, email addresses, and phone numbers into consistent formats makes it possible to merge those records into accurate customer profiles. In legal and financial contexts, clean data supports efficient risk analysis and regulatory compliance.
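To illustrate the eCommerce case, here is a minimal Python sketch that normalizes customer records arriving from different channels and removes duplicates. Several details are illustrative assumptions rather than a prescribed schema: the field names (`name`, `email`, `phone`), the `Customer` type, and the choice of email address as the matching key.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Customer:
    name: str
    email: str
    phone: str


def normalize(record: dict) -> Customer:
    """Bring one raw record into a consistent format."""
    # Collapse repeated whitespace and use title case for names.
    name = " ".join(record.get("name", "").split()).title()
    # Lowercase emails so case differences don't create duplicates (a simplifying assumption).
    email = record.get("email", "").strip().lower()
    # Keep digits only, so "600-123-456" and "600 123 456" compare equal.
    phone = re.sub(r"\D", "", record.get("phone", ""))
    return Customer(name=name, email=email, phone=phone)


def clean(records: List[dict]) -> List[Customer]:
    """Normalize records, drop those without an email, and deduplicate by email."""
    unique: Dict[str, Customer] = {}
    for raw in records:
        customer = normalize(raw)
        if not customer.email:
            continue  # skip records with no matching key (a real pipeline might queue them for review)
        unique.setdefault(customer.email, customer)  # first occurrence wins
    return list(unique.values())
```

Keeping the first occurrence is the simplest merge rule. In practice you might prefer the most recent or most complete record for each key.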

Implement protocols for continuous validation

Continuous validation is essential for maintaining data integrity. Implement monitoring systems that actively detect anomalies or deviations and alert teams to address issues before they affect AI outcomes. Regulated industries like finance and insurance especially benefit from continuous validation to uphold quality standards and ensure regulatory compliance.
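A minimal version of such a monitor, written in Python with only the standard library, could flag any daily metric that drifts more than a few standard deviations from its recent history. One example metric is the number of orders missing a customer ID. The `MetricMonitor` class, its window size, and its three-sigma threshold are hypothetical choices made for illustration. The `alert` callback stands in for whatever notification channel your team uses.

```python
import statistics
from collections import deque
from typing import Callable, Deque


class MetricMonitor:
    """Flags metric values that deviate sharply from a rolling baseline (z-score test)."""

    def __init__(self, window: int = 30, threshold: float = 3.0,
                 min_history: int = 5,
                 alert: Callable[[str], None] = print) -> None:
        self.history: Deque[float] = deque(maxlen=window)  # most recent "normal" values
        self.threshold = threshold      # standard deviations that count as anomalous
        self.min_history = min_history  # observations needed before judging
        self.alert = alert              # stand-in for email, chat, paging, etc.

    def check(self, value: float) -> bool:
        """Return True and raise an alert if `value` is anomalous; otherwise record it."""
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev == 0:
                anomalous = value != mean  # flat baseline: any change is suspicious
            else:
                anomalous = abs(value - mean) / stdev > self.threshold
            if anomalous:
                self.alert(f"Anomaly: {value} vs. baseline mean {mean:.2f}")
                return True  # anomalies are not added to the baseline
        self.history.append(value)
        return False
```

Anomalous values are deliberately kept out of the baseline, so a single bad batch does not shift what counts as normal.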

Integrate a wide variety of high-quality data sources

Combine internal data with carefully selected external sources to give your AI a more complete picture. Tech companies that integrate diverse data channels can innovate more effectively and anticipate market shifts. The key is being selective—choose sources that genuinely enhance your analysis.
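One practical way to check whether an external source genuinely enhances your analysis is to measure how much of your internal data it actually covers. The sketch below left-joins internal records with a hypothetical external lookup table keyed on a postcode and reports the match rate. The function name, field names, and data are assumptions for illustration.

```python
from typing import Dict, List, Tuple


def enrich(internal: List[dict], external: Dict[str, dict],
           key: str = "postcode") -> Tuple[List[dict], float]:
    """Left-join internal rows with an external lookup table.

    Returns the merged rows and the share of internal rows the external source matched.
    """
    merged: List[dict] = []
    matched = 0
    for row in internal:
        extra = external.get(row.get(key))
        if extra is None:
            merged.append(dict(row))  # no external data: keep the row unchanged
        else:
            matched += 1
            merged.append({**extra, **row})  # internal values win on conflicting fields
    match_rate = matched / len(internal) if internal else 0.0
    return merged, match_rate
```

A low match rate is an early warning that the source may add more gaps than value. Letting internal values win on conflicting fields is one possible policy, not a rule.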

Use generative AI to create synthetic data

When real-world data is limited or biased, generative AI can create synthetic alternatives that simulate realistic scenarios. In eCommerce, for example, companies can use synthetic data to predict buying patterns during peak seasons, while legal and financial institutions can run risk simulations without exposing confidential information.
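Generative AI approaches learn the joint structure of the real data. The Python sketch below is a much simpler statistical stand-in, not a generative model, that shows the basic idea. It fits a normal distribution to each numeric column of a small real dataset and samples new rows from those distributions. The `fit_and_sample` function and the example columns are hypothetical. Columns are sampled independently, so correlations such as the one between basket size and order value are lost. This approach alone also offers no formal privacy guarantee.

```python
import random
import statistics
from typing import Dict, List


def fit_and_sample(rows: List[Dict[str, float]], n: int, seed: int = 0) -> List[Dict[str, float]]:
    """Return n synthetic rows drawn from per-column normal distributions fitted to `rows`.

    Assumptions: every column is numeric and non-negative, and columns are treated
    as independent, so correlations between them are NOT preserved.
    """
    if len(rows) < 2:
        raise ValueError("need at least two real rows to estimate a spread")
    rng = random.Random(seed)  # seeded so runs are reproducible
    params = {}
    for col in rows[0]:
        values = [r[col] for r in rows]
        params[col] = (statistics.fmean(values), statistics.stdev(values))
    synthetic = []
    for _ in range(n):
        # Clip at zero because quantities like order value cannot be negative.
        synthetic.append({col: max(0.0, rng.gauss(mu, sigma))
                          for col, (mu, sigma) in params.items()})
    return synthetic
```

Seeding the random generator makes the synthetic dataset reproducible, which helps when comparing model runs.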

Build a culture where data quality matters to everyone

Finally, companies should foster an environment where data quality in AI is everyone’s responsibility. Train teams on best practices for data management and encourage collaboration between IT specialists, analysts, and business units. When departments communicate openly and work together, they build a shared understanding of why high data quality standards matter. That understanding leads to better decisions and a stronger competitive edge.

The quality of your data directly affects the accuracy of your AI models and the decisions built on them. At Linguaserve, we combine cutting-edge technology with data excellence to help businesses enhance their international communications. If you’re ready to optimize your processes and unlock AI’s full potential, let’s discuss how we can help you reach your goals. Remember: your AI is only as good as your data, and there’s no better time to act than now.